Base resources: three master hosts and one node (worker) host

OS version: Ubuntu 24.04

Software versions: containerd 1.7.12, Kubernetes v1.31.2

Basic configuration

Configure time synchronization

    apt -y install chrony
    systemctl enable chrony && systemctl start chrony
    chronyc sources -v
    timedatectl set-timezone Asia/Shanghai
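
To confirm on each node that the clock has actually synchronized, the output of `chronyc tracking` can be checked from a script. This is a minimal sketch, assuming the usual `Leap status` line in that output; the helper name `chrony_leap_status` is hypothetical.

```shell
# Hypothetical helper: print the "Leap status" value found in
# `chronyc tracking` output supplied on stdin.
# chrony reports "Normal" once the clock is synchronised.
chrony_leap_status() {
  awk -F' *: *' '/^Leap status/ { print $2 }'
}

# Usage (on a node where chrony is running):
#   [ "$(chronyc tracking | chrony_leap_status)" = "Normal" ] && echo "clock is synchronised"
```

Reading from stdin keeps the helper independent of chrony itself, so it can be tried on saved output first.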

Configure hostname resolution

    hostnamectl set-hostname k8s-master-01

    # Append (tee -a) rather than overwrite, so the existing localhost entries are kept
    cat << EOF | tee -a /etc/hosts
    172.16.0.253 vip.cluster.local
    172.16.0.250 k8s-master-01
    172.16.0.251 k8s-master-02
    172.16.0.252 k8s-master-03
    EOF
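
Later steps refer to hosts by name, so it can help to check that every name has an entry before continuing. This is a rough sketch; the helper name `missing_hosts` is hypothetical, and it only inspects a hosts file, not DNS.

```shell
# Hypothetical helper: print each NAME that has no entry in a hosts file.
# Usage: missing_hosts FILE NAME...
missing_hosts() {
  file=$1
  shift
  for name in "$@"; do
    # A name counts as present if it appears as a whole field after the
    # address on a line that is not a comment.
    awk -v n="$name" '
      !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file" || echo "$name"
  done
}

# Usage:
#   missing_hosts /etc/hosts vip.cluster.local k8s-master-01 k8s-master-02 k8s-master-03 k8s-node-01
```

With only the four entries listed above, this would report `k8s-node-01`, which the passwordless-login step expects to resolve; its address is not given in this guide and has to be added by hand.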

Configure passwordless SSH login

    ssh-keygen
    ssh-copy-id k8s-master-01
    ssh-copy-id k8s-master-02
    ssh-copy-id k8s-master-03
    ssh-copy-id k8s-node-01

Disable swap

    swapoff -a && sudo sed -i '/swap/s/^/#/' /etc/fstab
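
The kubelet is sensitive to swap, so it is worth verifying that nothing is still active after the command above. This is a small sketch based on the `/proc/swaps` table; the helper name `swap_is_off` is hypothetical.

```shell
# Hypothetical helper: succeed if the swaps table (default /proc/swaps)
# lists no active swap area. The first line of the table is a header.
swap_is_off() {
  awk 'NR > 1 && NF > 0 { n++ } END { exit (n > 0) }' "${1:-/proc/swaps}"
}

# Usage:
#   swap_is_off && echo "swap is disabled" || echo "swap is still active"
```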

Disable the firewall
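
The original gives no command for this step. The sketch below assumes the firewall in question is `ufw`, the default front end on Ubuntu; if a different firewall is in use, the equivalent command for that tool applies instead. The function name `disable_firewall` is hypothetical.

```shell
# Assumption: ufw is the firewall front end (the Ubuntu default). The guide
# itself does not say which firewall it disables.
disable_firewall() {
  if command -v ufw >/dev/null 2>&1; then
    ufw disable
  else
    echo "ufw not found; no firewall to disable" >&2
  fi
}

# Usage (as root):
#   disable_firewall
```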

Add the kernel modules required by IPVS

    apt update && apt install -y ipset ipvsadm

    cat > /etc/modules-load.d/ipvs.conf <<EOF
    ip_vs
    ip_vs_rr
    ip_vs_wrr
    ip_vs_sh
    EOF

    modprobe ip_vs
    modprobe ip_vs_rr
    modprobe ip_vs_wrr
    modprobe ip_vs_sh

    lsmod | grep -e ip_vs -e nf_conntrack
    ip_vs_sh               16384  0
    ip_vs_wrr              16384  0
    ip_vs_rr               16384  0
    ip_vs                 212992  6 ip_vs_rr,ip_vs_sh,ip_vs_wrr
    nf_conntrack          204800  1 ip_vs
    nf_defrag_ipv6         24576  2 nf_conntrack,ip_vs
    nf_defrag_ipv4         16384  1 nf_conntrack
    libcrc32c              16384  5 nf_conntrack,btrfs,xfs,raid456,ip_vs
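
Instead of reading the `lsmod` listing by eye, the check can be scripted. This is a minimal sketch; the helper name `missing_modules` is hypothetical, and it expects the full `lsmod` output including its header line.

```shell
# Hypothetical helper: read full `lsmod` output on stdin and print every
# required module (given as arguments) that is not loaded.
missing_modules() {
  loaded=$(awk 'NR > 1 { print $1 }')   # first column, header skipped
  for mod in "$@"; do
    printf '%s\n' "$loaded" | grep -qx "$mod" || echo "$mod"
  done
}

# Usage:
#   lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack
```

Only the first column is compared, so a module that merely appears in another module's "used by" list is not counted as loaded.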

Add the kernel modules required by containerd

    cat << EOF > /etc/modules-load.d/containerd.conf
    overlay
    br_netfilter
    EOF

    modprobe overlay
    modprobe br_netfilter

Add the 99-kubernetes-cri.conf sysctl configuration file

    cat << EOF > /etc/sysctl.d/99-kubernetes-cri.conf
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    user.max_user_namespaces=28633
    vm.swappiness=0
    EOF

    # Apply the new file, then reload all sysctl configuration
    sysctl -p /etc/sysctl.d/99-kubernetes-cri.conf
    sysctl --system

    # Verify that the modules are loaded and the settings are active
    lsmod | grep br_netfilter
    lsmod | grep overlay
    sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward
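
To check every key in the file against the running kernel rather than just three of them, something like the following could be used. It is a sketch under assumptions: the helper name `sysctl_drift` is hypothetical, it handles single-valued keys only, and the second argument (the command used to read a live value) exists purely so the helper can be exercised without touching the kernel.

```shell
# Hypothetical helper: compare each "key = value" line of a sysctl.d file
# with the live value and print the keys that differ.
# $1: config file   $2: command that prints a key's live value (default: sysctl -n)
sysctl_drift() {
  conf=$1
  reader=${2:-"sysctl -n"}
  # Drop comments and blank lines, then split each line at the first "=".
  sed -e 's/#.*//' -e '/^[[:space:]]*$/d' "$conf" |
  while IFS='=' read -r key want; do
    key=$(echo "$key" | tr -d '[:space:]')
    want=$(echo "$want" | tr -d '[:space:]')
    have=$($reader "$key" 2>/dev/null)
    [ "$have" = "$want" ] || echo "$key: expected $want, got ${have:-<unset>}"
  done
}

# Usage:
#   sysctl_drift /etc/sysctl.d/99-kubernetes-cri.conf
```

No output means every key in the file matches the running system.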

Deploy the container runtime

Install containerd

    apt install -y containerd

    mkdir -p /etc/containerd && containerd config default > /etc/containerd/config.toml

    # Edit the file and set the following three values:
    #   SystemdCgroup = true
    #   sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"
    #   config_path = "/etc/containerd/certs.d"
    vim /etc/containerd/config.toml

    # Configure registry mirrors for docker.io
    mkdir -p /etc/containerd/certs.d/docker.io
    cat > /etc/containerd/certs.d/docker.io/hosts.toml <<EOF
    server = "https://docker.io"
    [host."https://dockerproxy.com"]
      capabilities = ["pull", "resolve"]

    [host."https://docker.m.daocloud.io"]
      capabilities = ["pull", "resolve"]

    [host."https://docker.agsv.top"]
      capabilities = ["pull", "resolve"]

    [host."https://registry.docker-cn.com"]
      capabilities = ["pull", "resolve"]
    EOF

    systemctl daemon-reload
    systemctl enable containerd --now
    systemctl status containerd
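
When the same change has to be made on four hosts, editing by hand is easy to get wrong. The three settings could instead be applied with `sed`, roughly as below. This is a sketch, not a drop-in: the helper name `patch_containerd_config` is hypothetical, and it assumes a freshly generated default config in which `SystemdCgroup = false`, a `sandbox_image` line, and an empty `config_path = ""` (in the CRI registry section only) are present. It relies on GNU `sed -i`, as shipped with Ubuntu.

```shell
# Hypothetical helper: apply the three containerd settings without an editor.
# Assumes the file was produced by `containerd config default` and still
# contains the default values matched below.
patch_containerd_config() {
  sed -i \
    -e 's#SystemdCgroup = false#SystemdCgroup = true#' \
    -e 's#sandbox_image = ".*"#sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"#' \
    -e 's#config_path = ""#config_path = "/etc/containerd/certs.d"#' \
    "$1"
}

# Usage:
#   patch_containerd_config /etc/containerd/config.toml
#   grep -E 'SystemdCgroup|sandbox_image|config_path' /etc/containerd/config.toml
```

The trailing `grep` is there because a silent non-match (for example on a config from a different containerd version) would otherwise go unnoticed.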

Install runc (optional)

    wget https://3dview-1251252938.cos.ap-shanghai.myqcloud.com/dengjinjun/software/runc.amd64

    install -m 755 runc.amd64 /usr/local/sbin/runc

Install crictl (optional)

    wget https://3dview-1251252938.cos.ap-shanghai.myqcloud.com/dengjinjun/software/crictl-v1.28.0-linux-amd64.tar.gz

    tar -zxvf crictl-v1.28.0-linux-amd64.tar.gz

    install -m 755 crictl /usr/local/bin/crictl

    crictl --runtime-endpoint=unix:///run/containerd/containerd.sock version

    Version:  0.1.0
    RuntimeName:  containerd
    RuntimeVersion:  v1.7.3
    RuntimeApiVersion:  v1
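
Passing `--runtime-endpoint` on every call gets tedious; crictl also reads its endpoints from a config file, by default `/etc/crictl.yaml`. A possible way to write that file is sketched below; the helper name `write_crictl_config` and the 10-second timeout are illustrative choices, not values from this guide.

```shell
# Hypothetical helper: write a crictl config file (default /etc/crictl.yaml)
# pointing crictl at the containerd socket used in the command above.
write_crictl_config() {
  cat > "${1:-/etc/crictl.yaml}" <<'EOF'
runtime-endpoint: unix:///run/containerd/containerd.sock
image-endpoint: unix:///run/containerd/containerd.sock
timeout: 10
EOF
}

# Usage (as root), after which `crictl version` needs no extra flag:
#   write_crictl_config
```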

Install nerdctl (optional)

    wget https://3dview-1251252938.cos.ap-shanghai.myqcloud.com/dengjinjun/software/nerdctl-1.7.4-linux-amd64.tar.gz

    tar xfv nerdctl-1.7.4-linux-amd64.tar.gz -C /usr/bin/

    nerdctl version

    mkdir -p /etc/nerdctl
    cat > /etc/nerdctl/nerdctl.toml <<EOF
    namespace = "k8s.io"
    debug = false
    debug_full = false
    insecure_registry = true
    EOF

Deploy the load-balancing environment

    apt install keepalived haproxy -y

    # Note: the 192.168.0.x addresses below come from a different network than the
    # /etc/hosts example earlier in this guide (172.16.0.250-253). Replace them with
    # the addresses of your own masters and VIP.
    cat > /etc/haproxy/haproxy.cfg <<EOF
    global
        log /dev/log local0
        log /dev/log local1 notice
        chroot /var/lib/haproxy
        stats socket /run/haproxy/admin.sock mode 660 level admin
        stats timeout 30s
        user haproxy
        group haproxy
        daemon

        # Default SSL material locations
        ca-base /etc/ssl/certs
        crt-base /etc/ssl/private

        # See: https://ssl-config.mozilla.org/#server=haproxy&server-version=2.0.3&config=intermediate
        ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384
        ssl-default-bind-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256
        ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

    defaults
        log global
        mode http
        option httplog
        option dontlognull
        timeout connect 5000
        timeout client 50000
        timeout server 50000
        errorfile 400 /etc/haproxy/errors/400.http
        errorfile 403 /etc/haproxy/errors/403.http
        errorfile 408 /etc/haproxy/errors/408.http
        errorfile 500 /etc/haproxy/errors/500.http
        errorfile 502 /etc/haproxy/errors/502.http
        errorfile 503 /etc/haproxy/errors/503.http
        errorfile 504 /etc/haproxy/errors/504.http

    #---------------------------------------------------------------------
    # apiserver frontend which proxies to the control plane nodes
    #---------------------------------------------------------------------
    frontend apiserver
        bind *:16443
        mode tcp
        option tcplog
        default_backend apiserverbackend

    #---------------------------------------------------------------------
    # round robin balancing for apiserver
    #---------------------------------------------------------------------
    backend apiserverbackend
        option httpchk
        http-check connect ssl
        http-check send meth GET uri /healthz
        http-check expect status 200
        mode tcp
        balance roundrobin
        server master1 192.168.0.51:6443 check verify none
        server master2 192.168.0.52:6443 check verify none
        server master3 192.168.0.53:6443 check verify none
    EOF

    touch /etc/keepalived/keepalived.conf
    cat > /etc/keepalived/keepalived.conf <<EOF
    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
    }
    vrrp_script check_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 3
        weight -2
        fall 10
        rise 2
    }

    vrrp_instance VI_1 {
        state MASTER            # MASTER on master1, BACKUP on the other two
        interface ens33         # network interface name
        virtual_router_id 51
        priority 101            # 101 on master1, 100 on the other two
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.0.50        # virtual IP, identical on all nodes
        }
        track_script {
            check_apiserver
        }
    }
    EOF

    # The delimiter is quoted ('EOF') so that $* is written to the script
    # literally instead of being expanded by the current shell
    cat > /etc/keepalived/check_apiserver.sh <<'EOF'
    #!/bin/sh

    errorExit() {
        echo "*** $*" 1>&2
        exit 1
    }

    curl -sfk --max-time 2 https://localhost:16443/healthz -o /dev/null || errorExit "Error GET https://localhost:16443/healthz"
    EOF

    chmod +x /etc/keepalived/check_apiserver.sh
    systemctl restart haproxy keepalived
    systemctl enable haproxy keepalived
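
The keepalived file differs between masters only in `state` and `priority`, so those two values can be derived from the hostname rather than edited by hand. The sketch below encodes the rule from the config comments (the first master is MASTER with priority 101, the others BACKUP with priority 100); the function name `keepalived_role` is hypothetical.

```shell
# Hypothetical helper: print the keepalived "state" and "priority" for a node.
# $1: this node's name   $2: the name of the node that should be MASTER
keepalived_role() {
  if [ "$1" = "$2" ]; then
    echo "MASTER 101"
  else
    echo "BACKUP 100"
  fi
}

# Usage: capture the two values for use when generating keepalived.conf
#   set -- $(keepalived_role "$(hostname)" k8s-master-01)
#   state=$1 priority=$2
```

With `weight -2` in the check script, a failing MASTER drops from 101 to 99, below the BACKUP priority of 100, which is what lets the VIP move.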

Install kubeadm

Prepare the package repository for Kubernetes 1.31.2

    apt-get install -y apt-transport-https ca-certificates curl gpg

    mkdir -p /etc/apt/keyrings
    curl -fsSL https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.31/deb/Release.key | gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

    echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.31/deb/ /" | tee /etc/apt/sources.list.d/kubernetes.list

    apt-get update
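
The repository path contains only the minor version (`v1.31`), not the full release. When scripting installs for different versions, the URL can be derived from the full version string. A small sketch follows; the function name `k8s_repo_url` is hypothetical, and the URL layout is taken from the commands above.

```shell
# Hypothetical helper: derive the per-minor-version repository URL on the
# Aliyun mirror from a full Kubernetes version such as v1.31.2.
k8s_repo_url() {
  minor=$(echo "$1" | cut -d. -f1,2)   # v1.31.2 -> v1.31
  echo "https://mirrors.aliyun.com/kubernetes-new/core/stable/${minor}/deb/"
}

# Usage:
#   curl -fsSL "$(k8s_repo_url v1.31.2)Release.key" | gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
```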

Package repository for Kubernetes 1.28.2 (alternative)

    apt-get install -y apt-transport-https ca-certificates curl gpg

    curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

    tee /etc/apt/sources.list.d/kubernetes.list <<-'EOF'
    deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
    EOF

    apt-get update

Install the packages

    apt install kubelet kubeadm kubectl

    echo 'export GNUTLS_CPUID_OVERRIDE=0x1' | sudo tee -a /etc/environment

    # Pin the versions so a routine upgrade does not move the cluster
    apt-mark hold kubelet kubeadm kubectl

    systemctl enable kubelet.service

    # Shell completion for kubectl
    apt install -y bash-completion

    source /usr/share/bash-completion/bash_completion
    echo 'source <(kubectl completion bash)' >>~/.bashrc
    source ~/.bashrc
    kubectl completion bash >/etc/bash_completion.d/kubectl

    # Short alias "kk" with completion
    echo 'alias kk=kubectl' >>~/.bashrc
    echo 'complete -F __start_kubectl kk' >>~/.bashrc
    source ~/.bashrc

Initialize the cluster

Restart containerd before initializing!

    systemctl restart containerd

Pull the images (optional)

If you skip this step, kubeadm init pulls the images itself, but that slows initialization down.

    kubeadm config images pull --kubernetes-version=v1.31.2 --image-repository registry.aliyuncs.com/google_containers

Initialize from the command line

    kubeadm init \
      --kubernetes-version=v1.31.2 \
      --image-repository registry.aliyuncs.com/google_containers --v=5 \
      --control-plane-endpoint vip.cluster.local:16443 \
      --upload-certs \
      --service-cidr=10.96.0.0/12 \
      --pod-network-cidr=10.244.0.0/16
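
The service CIDR and the pod CIDR should not overlap each other (or the host network). If you change either value, a quick arithmetic check like the one below can catch a mistake before `kubeadm init` runs. This is an illustrative sketch for IPv4 only; the function names are hypothetical.

```shell
# Convert a dotted-quad IPv4 address to an integer.
# The body runs in a subshell, so the IFS change does not leak out.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Print the first and last address of a CIDR block as two integers.
cidr_bounds() {
  bits=${1#*/}
  base=$(ip_to_int "${1%/*}")
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  echo "$(( base & mask )) $(( (base & mask) | (mask ^ 4294967295) ))"
}

# Succeed if the two CIDR blocks share at least one address.
cidrs_overlap() {
  set -- $(cidr_bounds "$1") $(cidr_bounds "$2")
  # $1..$2 is the first range, $3..$4 the second
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Usage:
#   cidrs_overlap 10.96.0.0/12 10.244.0.0/16 && echo "CIDRs overlap" || echo "CIDRs are disjoint"
```

For the values used above, 10.96.0.0/12 spans 10.96.0.0 to 10.111.255.255, so it is disjoint from 10.244.0.0/16.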

Initialize from a configuration file (alternative)

Print the default configuration used for cluster initialization

    kubeadm config print init-defaults > init.default.yaml

Based on these defaults, create a custom file named kubeadm.yaml

    apiVersion: kubeadm.k8s.io/v1beta3
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: abcdef.0123456789abcdef
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    kind: InitConfiguration
    localAPIEndpoint:
      advertiseAddress: 192.168.0.51   # this master's own IP
      bindPort: 6443
    nodeRegistration:
      criSocket: unix:///var/run/containerd/containerd.sock
      imagePullPolicy: IfNotPresent
      name: master1
      taints: null
    ---
    apiServer:
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta3
    controlPlaneEndpoint: vip.cluster.local:16443
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controllerManager: {}
    dns: {}
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.aliyuncs.com/google_containers
    kind: ClusterConfiguration
    kubernetesVersion: 1.28.2          # set to the version you are installing (v1.31.2 in this guide)
    networking:
      dnsDomain: cluster.local
      podSubnet: 10.200.0.0/16         # must match the CNI network (flannel defaults to 10.244.0.0/16)
      serviceSubnet: 10.96.0.0/12
    scheduler: {}

Initialize using the YAML file

    kubeadm init --config kubeadm.yaml

Successful initialization

    Your Kubernetes control-plane has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Alternatively, if you are the root user, you can run:

      export KUBECONFIG=/etc/kubernetes/admin.conf

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join 192.168.226.4:6443 --token ejfu30.jwtm65sw7j49fsko \
            --discovery-token-ca-cert-hash sha256:ce7825defa02d313f9fbdbad40e8ea930b20d6decde6d97b7320cfe3eaeed542

If initialization fails

Reset the node and remove the leftover state before trying again:

    kubeadm reset -f

    rm -rf ~/.kube/ /etc/kubernetes/ /etc/cni /opt/cni /var/lib/etcd /var/etcd

Troubleshooting reference

    journalctl -xeu kubelet | grep Failed
    # Files to inspect:
    #   /var/lib/kubelet/config.yaml
    #   /etc/kubernetes/kubelet.conf
    # Also check that the domain name and the VIP resolve and respond to ping

Deploy the flannel network plugin

    wget https://3dview-1251252938.cos.ap-shanghai.myqcloud.com/dengjinjun/software/kube-flannel.yml
    kubectl apply -f kube-flannel.yml

Deploy the ingress controller

I originally planned to make the ingress controller highly available by deploying it on node1 and node2 and fronting it with a keepalived virtual IP. That has little value in production, though, because a cloud provider's load balancer would normally do the job, so here I deploy the ingress controller on node1 only.

    kubectl label node k8s-node1 node-role.kubernetes.io/edge=

    wget https://github.com/kubernetes/ingress-nginx/releases/download/helm-chart-4.10.1/ingress-nginx-4.10.1.tgz

    helm show values ingress-nginx-4.10.1.tgz

Customize values.yaml as follows:

    controller:
      ingressClassResource:
        name: nginx
        enabled: true
        default: true
        controllerValue: "k8s.io/ingress-nginx"
      admissionWebhooks:
        enabled: false
      replicaCount: 1
      image:
        registry: docker.io
        image: unreachableg/registry.k8s.io_ingress-nginx_controller
        imagePullPolicy: Never
        tag: "v1.9.5"
        digest: sha256:bdc54c3e73dcec374857456559ae5757e8920174483882b9e8ff1a9052f96a35

      hostNetwork: true

      nodeSelector:
        node-role.kubernetes.io/edge: ''
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - nginx-ingress
              - key: component
                operator: In
                values:
                - controller
            topologyKey: kubernetes.io/hostname

      tolerations:
        - key: node-role.kubernetes.io/master
          operator: Exists
          effect: NoSchedule
        - key: node-role.kubernetes.io/master
          operator: Exists
          effect: PreferNoSchedule

With a replica count of 1, the controller is scheduled onto the k8s-node1 node and uses the host network.

    crictl --runtime-endpoint=unix:///run/containerd/containerd.sock pull unreachableg/registry.k8s.io_ingress-nginx_controller:v1.9.5

    helm install ingress-nginx ingress-nginx-4.10.1.tgz --create-namespace -n ingress-nginx -f values.yaml

    kubectl get po -n ingress-nginx

To test, open http://192.168.226.4. If it returns the default nginx 404 page, the deployment is complete.

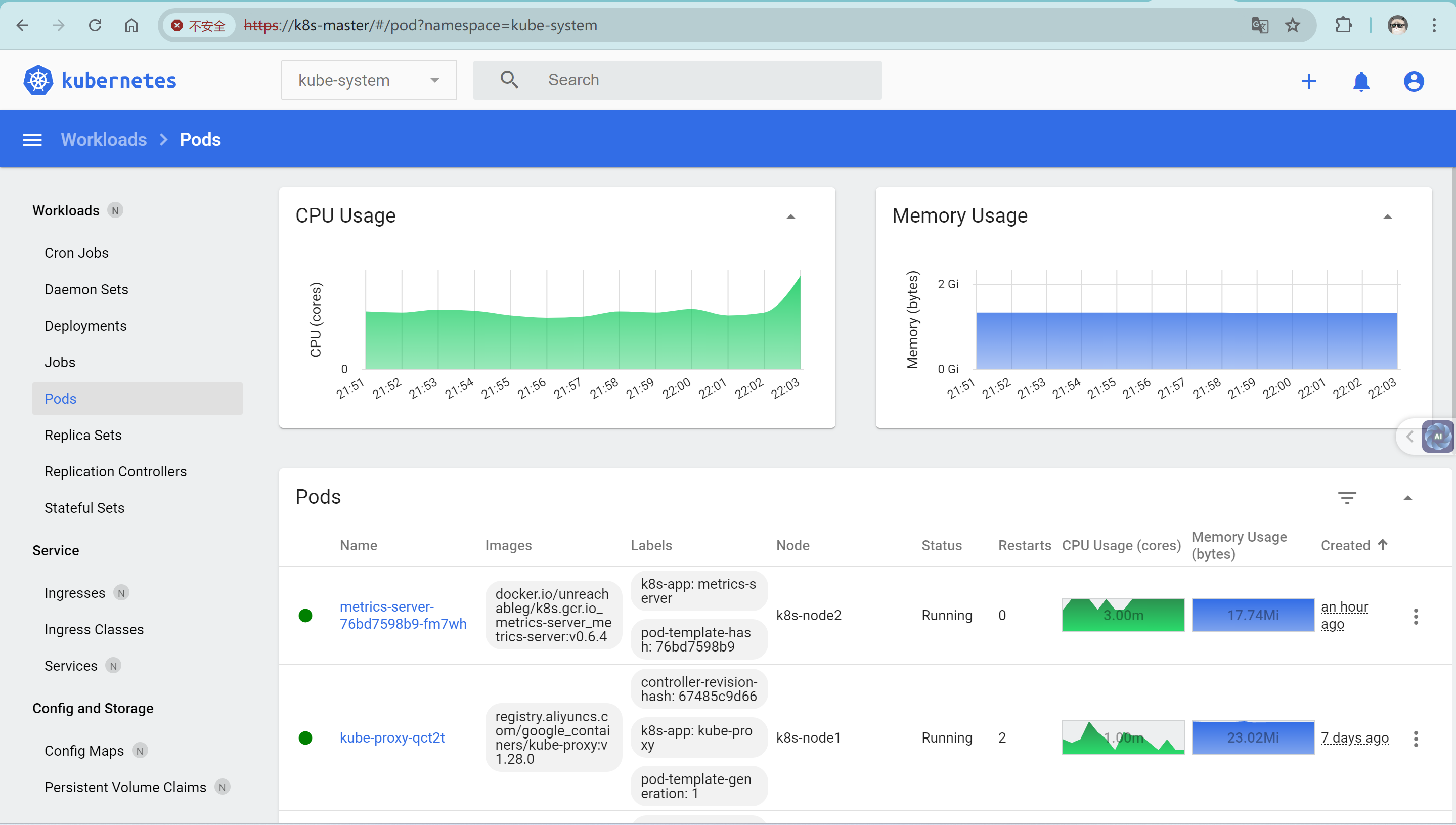

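Deploy metrics-server

Deploy metrics-server first. metrics-server is a core component of a Kubernetes cluster that collects resource usage data (such as CPU and memory) for the nodes and containers in the cluster. The data is used mainly for autoscaling and monitoring.

    wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.4/components.yaml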

In the manifest, change the image to docker.io/unreachableg/k8s.gcr.io_metrics-server_metrics-server:v0.6.4 and add --kubelet-insecure-tls to the container's startup arguments.
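
Both edits can be made with `sed` instead of by hand. The sketch below is an assumption-laden illustration: the function name `patch_metrics_server` is hypothetical, and it assumes the downloaded manifest contains an `image:` line mentioning metrics-server and a `- --metric-resolution=...` argument to anchor on. It uses GNU `sed` (the `\n` in the replacement), and running it twice would add the flag twice.

```shell
# Hypothetical helper: patch a downloaded metrics-server manifest in place.
# 1) point the image at the mirror named in the text above
# 2) add --kubelet-insecure-tls after the --metric-resolution argument,
#    reusing that line's indentation
patch_metrics_server() {
  sed -i \
    -e 's#\(image: \).*metrics-server.*#\1docker.io/unreachableg/k8s.gcr.io_metrics-server_metrics-server:v0.6.4#' \
    -e 's#^\( *\)- --metric-resolution=.*#&\n\1- --kubelet-insecure-tls#' \
    "$1"
}

# Usage:
#   patch_metrics_server components.yaml
#   grep -n -e 'image:' -e 'kubelet-insecure-tls' components.yaml
```

The closing `grep` confirms that both lines were actually changed, since `sed` stays silent when a pattern does not match.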

    kubectl apply -f components.yaml

    kubectl -n kube-system get pods

Use the kubectl top command to verify that metrics-server is working:

    kubectl top nodes

    kubectl top pods --all-namespaces

Deploy the Dashboard

Download the dashboard YAML manifest:

    wget https://raw.githubusercontent.com/kubernetes/dashboard/v3.0.0-alpha0/charts/kubernetes-dashboard.yaml

Edit kubernetes-dashboard.yaml and replace the host in the Ingress with your own domain:

    kind: Ingress
    apiVersion: networking.k8s.io/v1
    metadata:
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
      labels:
        app.kubernetes.io/name: nginx-ingress
        app.kubernetes.io/part-of: kubernetes-dashboard
      annotations:
        nginx.ingress.kubernetes.io/ssl-redirect: "true"
        cert-manager.io/issuer: selfsigned
    spec:
      ingressClassName: nginx
      tls:
        - hosts:
            - localhost
          secretName: kubernetes-dashboard-certs
      rules:
        - host: k8s.example.com
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: kubernetes-dashboard-web
                    port:
                      name: web
              - path: /api
                pathType: Prefix
                backend:
                  service:
                    name: kubernetes-dashboard-api
                    port:
                      name: api

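Pull the images on every node in advance

    # Pull into the k8s.io namespace; images pulled into ctr's default
    # namespace are not visible to the kubelet
    ctr -n k8s.io images pull docker.io/kubernetesui/dashboard-api:v1.0.0
    ctr -n k8s.io images pull docker.io/kubernetesui/metrics-scraper:v1.0.9
    ctr -n k8s.io images pull docker.io/kubernetesui/dashboard-web:v1.0.0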

Apply the dashboard manifest:

    kubectl apply -f kubernetes-dashboard.yaml

Confirm that the dashboard Pods have started normally:

    kubectl get po -n kubernetes-dashboard
    NAME                                                    READY   STATUS    RESTARTS   AGE
    kubernetes-dashboard-api-8586787f7-vtszr                1/1     Running   0          60s
    kubernetes-dashboard-metrics-scraper-6959b784dc-c98tz   1/1     Running   0          59s
    kubernetes-dashboard-web-6b6d549b4-qsrsn                1/1     Running   0          60s

    kubectl get ingress -n kubernetes-dashboard
    NAME                   CLASS   HOSTS             ADDRESS   PORTS     AGE
    kubernetes-dashboard   nginx   k8s.example.com             80, 443   6m47s
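
The same check can be automated by parsing the table that `kubectl get po` prints for a single namespace. This is a minimal sketch; the helper name `all_pods_ready` is hypothetical, and it assumes the default column order (NAME, READY, STATUS, ...), so it does not apply to `--all-namespaces` output.

```shell
# Hypothetical helper: read `kubectl get pods` output (one namespace, default
# columns) on stdin and succeed only if at least one pod is listed and every
# pod is Running with all of its containers ready.
all_pods_ready() {
  awk 'NR > 1 && NF > 0 {
         split($2, r, "/")                       # READY column, e.g. 1/1
         if ($3 != "Running" || r[1] != r[2]) bad = 1
         seen = 1
       }
       END { exit !(seen && !bad) }'
}

# Usage:
#   kubectl get po -n kubernetes-dashboard | all_pods_ready && echo "dashboard pods are ready"
```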

Create an administrator ServiceAccount

    kubectl create serviceaccount kube-dashboard-admin-sa -n kube-system

    kubectl create clusterrolebinding kube-dashboard-admin-sa \
      --clusterrole=cluster-admin --serviceaccount=kube-system:kube-dashboard-admin-sa

Create the token that the cluster administrator needs to log in to the dashboard:

    kubectl create token kube-dashboard-admin-sa -n kube-system --duration=87600h

    eyJhbGciOiJSUzI1NiIsImtpZCI6IkFZN3I4cGxrd3VKc0NJek80Z3M0bUlsVEdjYnhnNUI3d3NJNkJmaXJpeEkifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoyMDM0NTA4Mzc3LCJpYXQiOjE3MTkxNDgzNzcsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJrdWJlLWRhc2hib2FyZC1hZG1pbi1zYSIsInVpZCI6ImM3MjA1Nzk0LWJjYTgtNDM1OC04ZTQ5LWU1MjE1NGRjYWViNyJ9fSwibmJmIjoxNzE5MTQ4Mzc3LCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06a3ViZS1kYXNoYm9hcmQtYWRtaW4tc2EifQ.bVeV50hgZYAB2m92kf_N-M0bMaQrB6aCToD8IZUo2go4BTbHDflSzS4s6l6oR6dwxC3qalex9Mhs-GbwLqcyZjU4aBDa9a4pWsOG8dUn24AO4dpXiKv2sBnoLlje4TC3AZPjcigs4Ngp7Ac2_g7Jv2PkO5Ke_GqKiJvv56SLdDagplPEaR82Pa8IEaZIlqzNhwlJFPXFW52f_kqVq54j2-xiLWx3r91gwPTnkbzXgv_AN-ud30iCtEO3NyPID6C7Vv5aEqUC5CIEOz019-Qj_7cW_KFByecEF6iI009ifqmKNMaUwvlI6T8TheXUqB50cyKrBj3O1UpDEYjsKpn2pA

Log in to the Kubernetes dashboard with the token above.

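Worker node configuration

Adding a worker node requires the same preparation steps described above.

In addition, some extra configuration is needed.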

Raise the file descriptor (inotify) limits

Otherwise, starting containers may fail with an error like: "System.IO.IOException: The configured user limit (128) on the number of inotify instances has been reached."

    # Takes effect immediately, but is lost on reboot
    echo 8192 > /proc/sys/fs/inotify/max_user_instances

    # Persist the limits and reload
    echo fs.inotify.max_user_watches=65534 | tee -a /etc/sysctl.conf && sysctl -p
    echo fs.inotify.max_user_instances=65534 | tee -a /etc/sysctl.conf && sysctl -p
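
Appending with `tee -a` adds a duplicate line every time the step is repeated. An idempotent alternative is sketched below: it replaces an existing line for the key or appends one if none exists. The helper name `set_sysctl` and the default file name `/etc/sysctl.d/99-inotify.conf` are hypothetical choices, not part of the original steps.

```shell
# Hypothetical helper: set KEY=VALUE in a sysctl file, replacing an existing
# line for the key instead of appending a duplicate on every run.
# $1: key   $2: value   $3: file (default is an illustrative drop-in name)
set_sysctl() {
  key=$1 value=$2 file=${3:-/etc/sysctl.d/99-inotify.conf}
  touch "$file"
  if grep -q "^[[:space:]]*$key[[:space:]]*=" "$file"; then
    sed -i "s|^[[:space:]]*$key[[:space:]]*=.*|$key=$value|" "$file"
  else
    echo "$key=$value" >> "$file"
  fi
}

# Usage (as root):
#   set_sysctl fs.inotify.max_user_watches 65534
#   set_sysctl fs.inotify.max_user_instances 65534
#   sysctl --system
```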

Stop AppArmor from managing runc

Otherwise, when the apiserver asks the kubelet to delete a container, the command line hangs and the container stays in the Terminating state. Describing the affected pod shows an error like: "unknown error after kill: runc did not terminate successfully: exit status 1: unable to signal init: permission denied\n: unknown"

    # -p: do not fail if the directory already exists
    mkdir -p /etc/apparmor.d/disable
    ln -s /etc/apparmor.d/runc /etc/apparmor.d/disable/
    apparmor_parser -R /etc/apparmor.d/runc
    systemctl restart containerd

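Once the configuration is complete, run kubeadm token create --print-join-command on a master node. It prints the command for joining the cluster; run that command on the worker node.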
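
The same printed command also serves as the basis for joining the second and third masters, which additionally need the `--control-plane` and `--certificate-key` flags of `kubeadm join`. A hedged sketch follows: the function name `control_plane_join` is hypothetical, and the usage comment assumes that `kubeadm init phase upload-certs --upload-certs` prints the certificate key on its last output line.

```shell
# Hypothetical helper: turn a worker join command into a control-plane join
# command by appending the flags kubeadm expects for additional masters.
# $1: output of `kubeadm token create --print-join-command`
# $2: certificate key
control_plane_join() {
  echo "$1 --control-plane --certificate-key $2"
}

# Usage on the first master (assumes the key is the last line of the output):
#   join=$(kubeadm token create --print-join-command)
#   key=$(kubeadm init phase upload-certs --upload-certs | tail -n 1)
#   control_plane_join "$join" "$key"    # run the printed command on master 2 and 3
```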